Abstract

Amazon S3 has become the de facto cloud hard drive—scalable, durable, and cost-effective for ETL, OLAP, and archival workloads.

However, as workloads shift toward training, inference, and agentic AI, S3's original assumptions begin to show limits. These use cases may require:

- Sub-millisecond or low-single-digit-millisecond latency (e.g., for agentic memory, feature stores, RAG)

- Bursty and highly concurrent writes (e.g., for data preprocessing)

- Advanced semantics like append writes (e.g., for write-ahead logs for OLTP)

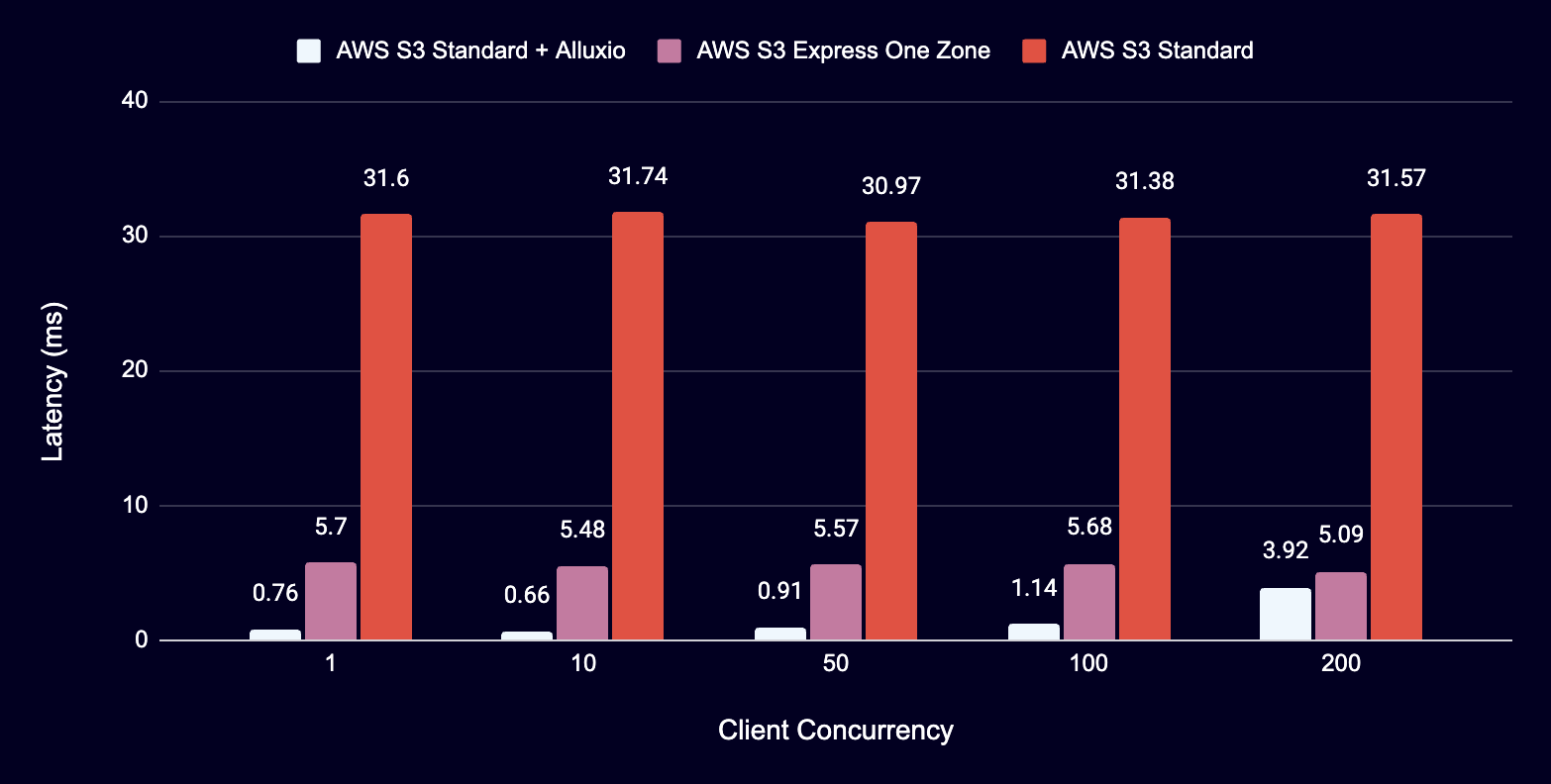

AWS offers high-performance managed file systems such as FSx for Lustre for POSIX compatibility, and premium object stores such as S3 Express One Zone (also known as S3 directory buckets) for ultra-low latency. But both come with trade-offs: higher cost, provisioning complexity, and possible data migration.

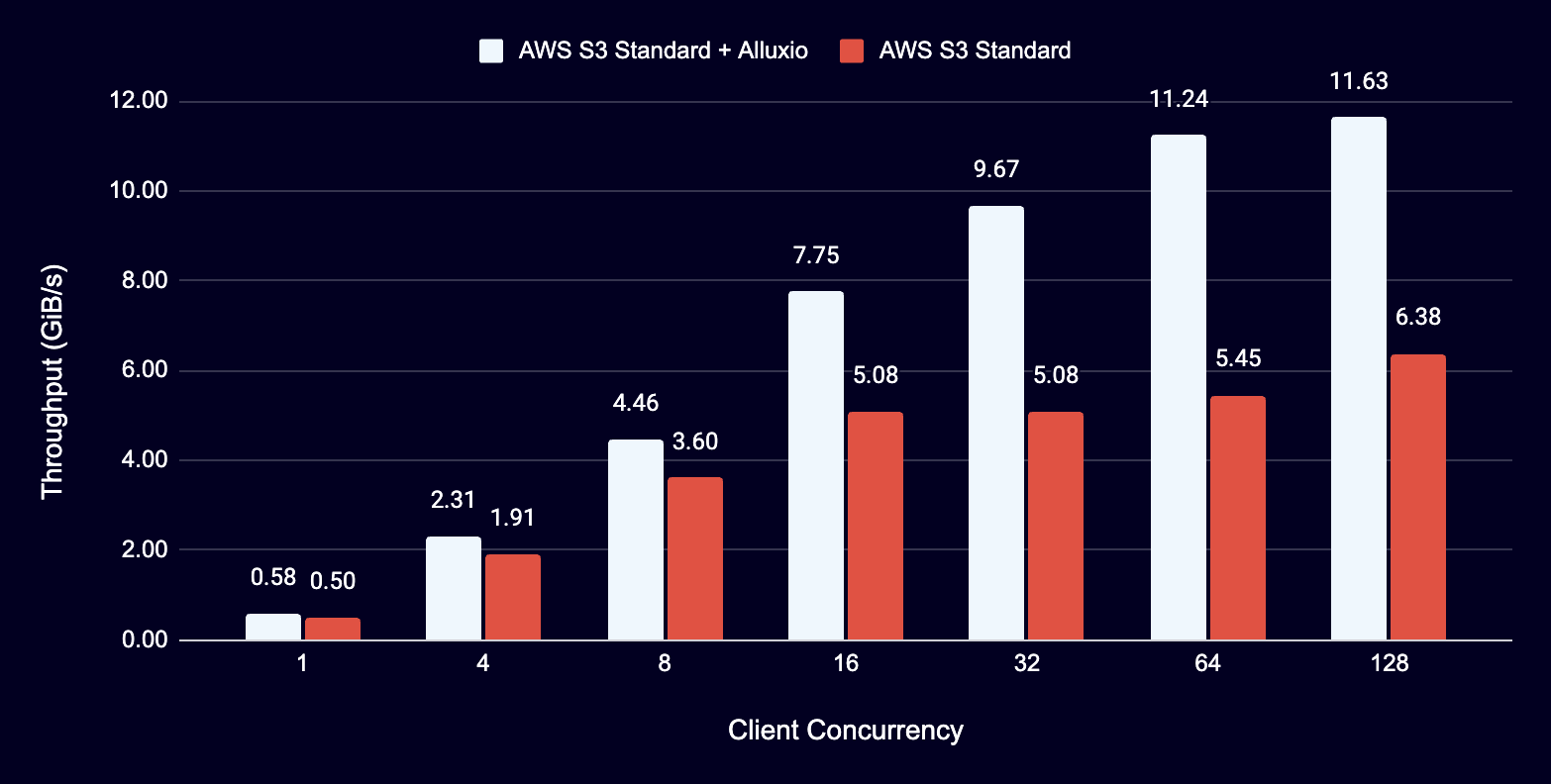

Alluxio takes a different approach. It acts as a transparent, distributed caching and augmentation layer on top of S3, combining the mountable experience of FSx, the ultra-low latency of S3 Express One Zone, and the cost efficiency of standard S3 buckets, all without requiring data migration. You can keep your s3:// paths (or mount a POSIX path), point clients at the Alluxio endpoint, and run.

Not strictly, but effectively: Alluxio ≈ FSx + S3 Express One Zone — without the cost or migration overhead.

Why This Matters — and Where S3 Bends

Amazon S3 is the undisputed backbone of cloud storage today, offering 11 nines (99.999999999%) of durability across Availability Zones, auto-partitioning, and ~$23/TB/month pricing (S3 Standard, us-east-1). It stores over 400 trillion objects and handles up to 150 million requests per second. Scale is solved.

But as workloads evolve—toward training, inference, agentic memory, OLTP, and real-time analytics—S3’s original design begins to show strain. Technical teams now demand:

- Sub-millisecond SLAs for feature stores, agentic memory, and RAG pipelines

- Efficient support for write-ahead logs and checkpointing large objects

- High-performance metadata operations across millions of objects

Ideally, all of this happens without giving up S3’s pricing, scalability, and operational simplicity.

The friction points in S3's current design include:

- Latency: Read time to first byte (TTFB, e.g., for GetObject) in S3 Standard buckets commonly lands in the 30–200 ms range, which is fine for batch but painful for inference and transactional access

- Limited Semantics: Rename = copy + delete; append = not supported

- Metadata bottlenecks: S3 “directories” are just key prefixes, and listing large ones is slow and expensive
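The semantic gaps in the list above can be illustrated with a toy in-memory model of a flat key space. This is only a sketch: `FlatObjectStore` and its methods are hypothetical names, not the S3 API, and the listing here scans every key, whereas real S3 returns paginated results (up to 1,000 keys per ListObjectsV2 call).

```python
class FlatObjectStore:
    """Toy in-memory model of a flat key/value object store (not the S3 API)."""

    def __init__(self):
        self._objects = {}  # key -> bytes; there are no real directories

    def put(self, key, data):
        self._objects[key] = bytes(data)  # objects are always written whole

    def get(self, key):
        return self._objects[key]

    def delete(self, key):
        del self._objects[key]

    def list_prefix(self, prefix):
        # A "directory listing" is a scan for keys that share a prefix.
        return sorted(k for k in self._objects if k.startswith(prefix))

    def rename(self, src, dst):
        # No native rename: copy the full object, then delete the source.
        self.put(dst, self.get(src))
        self.delete(src)

    def append(self, key, extra):
        # No native append: read the whole object and rewrite it.
        self.put(key, self.get(key) + extra)


store = FlatObjectStore()
store.put("logs/wal-0001", b"begin;")
store.append("logs/wal-0001", b"commit;")
store.rename("logs/wal-0001", "archive/wal-0001")
print(store.list_prefix("logs/"))     # []
print(store.get("archive/wal-0001"))  # b'begin;commit;'
```

In this model both `rename` and `append` cost time proportional to the object size, which is why write-ahead logs and large checkpoints are awkward on a plain object store.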

Simply put: S3 is brilliant at being a capacity store, but not a system for real-time and latency-critical workloads, and it doesn’t pretend to be.
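To see why the latency range cited above hurts interactive workloads, consider a back-of-the-envelope calculation. The number of dependent reads per request (20) and the 1 ms cached latency are illustrative assumptions, not measurements.

```python
def sequential_read_time_ms(num_reads, latency_ms):
    """Total wall-clock time when each read must wait for the previous one."""
    return num_reads * latency_ms


READS_PER_REQUEST = 20  # hypothetical: dependent lookups in one agent step

scenarios = [
    ("S3 Standard, low end (30 ms)", 30),
    ("S3 Standard, high end (200 ms)", 200),
    ("cached read, assumed (1 ms)", 1),
]

for label, latency_ms in scenarios:
    total = sequential_read_time_ms(READS_PER_REQUEST, latency_ms)
    print(f"{label}: {total} ms per request")
```

Under these assumptions, a single request spends between 600 ms and 4 s waiting on storage at S3 Standard latencies, versus 20 ms at the assumed cached latency.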

So the key question from architects becomes:

“Can I meet modern latency and semantics expectations without replacing or migrating off of S3?”

We believe the answer lies in augmenting, not replacing, S3—and that's where Alluxio comes in.

Alluxio: A Shim Layer Bringing Performance and Semantics on S3

Alluxio is a software layer that transparently sits between applications and S3 (or any object store). It offers both POSIX and S3-compatible APIs. Users can simply mount existing S3 buckets (or any other cloud object store) without any data migration or import. Unlike single-node API-translation tools such as s3fs, Alluxio is fully distributed and cloud-native, implementing decentralized metadata and data management.
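The general idea of a caching layer in front of an object store can be sketched as a read-through cache. This is a single-process illustration only, not Alluxio's implementation: `SlowBackend` and `ReadThroughCache` are hypothetical names, the delay is arbitrary, and a real distributed cache also has to handle eviction, consistency, and data placement across nodes.

```python
import time


class SlowBackend:
    """Stand-in for an object store; the delay is illustrative only."""

    def __init__(self, objects, delay_s=0.05):
        self._objects = dict(objects)
        self._delay_s = delay_s
        self.reads = 0  # counts how often the backend is actually hit

    def get(self, key):
        self.reads += 1
        time.sleep(self._delay_s)
        return self._objects[key]


class ReadThroughCache:
    """Serves repeat reads from memory; misses fall through to the backend."""

    def __init__(self, backend):
        self._backend = backend
        self._cache = {}

    def get(self, key):
        if key not in self._cache:
            # Cache miss: fetch once from the backend and keep a copy.
            self._cache[key] = self._backend.get(key)
        return self._cache[key]


backend = SlowBackend({"models/weights.bin": b"\x00" * 16})
cache = ReadThroughCache(backend)

for attempt in range(3):
    start = time.perf_counter()
    cache.get("models/weights.bin")
    elapsed_ms = (time.perf_counter() - start) * 1000
    print(f"read {attempt}: {elapsed_ms:.2f} ms")

print("backend reads:", backend.reads)
```

Only the first read pays the backend delay. The application keeps calling the same `get` with the same key, which is the sense in which such a layer is transparent.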
