Alluxio vs. FSx Lustre:

A Faster, More Scalable File System for AI

Alluxio delivers higher throughput for model training and inference cold starts, while also lowering latency for inference — all with a more flexible, cost-effective, multi-cloud architecture.

Why Choose Alluxio over FSx

Accelerate AI workloads with transparent, high-speed access to your data lake, such as AWS S3. See performance benchmark for details.

Customers have reported 40%+ faster training times, and 80%+ faster model deployment times with Alluxio compared to FSx.

Watch the on-demand webinar for model training case study

Read blog for model deployment case study

Alluxio is designed to optimize I/O performance for AI workloads. Alluxio's total cluster performance increases linearly with the number of Alluxio workers deployed. A single Alluxio worker provides up to 43.48 GB/s read throughput, utilizing 87% of a 400 Gbps network pipe.
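The 87% figure follows from a unit conversion: a 400 Gbps link carries at most 50 GB/s. The short check below assumes decimal units (1 GB = 10^9 bytes) and ignores protocol overhead. The `ideal_cluster_throughput` helper is a hypothetical illustration of the linear-scaling claim as an idealized upper bound, not a measured result.

```python
# Sanity check of the single-worker figures quoted above.
# Assumes decimal units (1 Gbps = 1e9 bits/s, 1 GB = 1e9 bytes).

def link_capacity_gb_per_s(link_gbps: float) -> float:
    """Convert a link speed in gigabits/s to gigabytes/s."""
    return link_gbps / 8

def utilization(throughput_gb_per_s: float, link_gbps: float) -> float:
    """Fraction of the link's raw capacity used by the given throughput."""
    return throughput_gb_per_s / link_capacity_gb_per_s(link_gbps)

def ideal_cluster_throughput(workers: int, per_worker_gb_per_s: float) -> float:
    """Idealized linear scaling: an upper bound, not a measurement."""
    return workers * per_worker_gb_per_s

print(f"{utilization(43.48, 400):.0%}")  # 87%
```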

See benchmarks for details

Alluxio co-locates data caching within GPU nodes, maximizing throughput and minimizing latency for training and inference workloads. This proximity boosts GPU utilization above 90% (e.g., 96% in MLPerf) by cutting idle time and I/O bottlenecks. It also leverages idle CPU, memory, and storage on GPU nodes without extra hardware costs, an advantage over FSx Lustre.

Learn more about GPU acceleration

In head-to-head comparisons with FSx, Alluxio saves 50-80% on storage costs alone. With the high number of IOPS required for AI workloads, Alluxio saves customers even more, because unlike FSx, Alluxio does not charge for IOPS. Alluxio further reduces infrastructure costs by eliminating redundant data movement and the need to over-provision GPUs to accommodate slow cold starts.

Contact us for a custom cost analysis

Alluxio scales AI workloads to 10 billion files per cluster, significantly exceeding FSx Lustre’s typical limit of under 1 billion files. Unlike FSx's centralized metadata approach, Alluxio’s DORA architecture removes bottlenecks, enabling horizontal scaling with linear performance gains. This supports training across thousands of GPUs and efficiently handles billions of small files essential to modern AI pipelines.
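To illustrate why removing a central metadata service helps with scaling, here is a minimal consistent-hashing sketch in which any client can compute which worker owns a file path without asking a central server. This is a generic illustration of decentralized lookup, not a description of how DORA is implemented; the `HashRing` class, the worker names, and the virtual-node count are all hypothetical.

```python
import bisect
import hashlib

class HashRing:
    """Toy consistent-hash ring mapping file paths to workers (hypothetical)."""

    def __init__(self, workers, vnodes=100):
        # Each worker is placed on the ring at `vnodes` pseudo-random points.
        self._ring = []
        for w in workers:
            for i in range(vnodes):
                self._ring.append((self._hash(f"{w}#{i}"), w))
        self._ring.sort()
        self._keys = [h for h, _ in self._ring]

    @staticmethod
    def _hash(key: str) -> int:
        return int.from_bytes(hashlib.md5(key.encode()).digest()[:8], "big")

    def owner(self, path: str) -> str:
        # The owner is the first ring point clockwise from the path's hash.
        idx = bisect.bisect(self._keys, self._hash(path)) % len(self._keys)
        return self._ring[idx][1]

ring = HashRing(["worker-0", "worker-1", "worker-2"])
print(ring.owner("/datasets/train/shard-00042.parquet"))
```

Because the lookup is a pure function of the path and the worker list, adding a worker only reassigns the paths that now hash to the new worker, and no single node has to hold the full file index.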

Alluxio’s Kubernetes-native operator simplifies deployment in containerized environments, unlike FSx, which requires manual setup.

Alluxio’s transparent data access enables zero-code integration with existing applications and storage, eliminating manual caching from AWS S3/Blob and complex tuning—accelerating time-to-value and reducing operational overhead. Alluxio supports diverse APIs, including POSIX (FUSE), S3, HDFS, and Python, enabling seamless integration across AI pipelines.

Alluxio provides a transparent abstraction between compute and storage across hybrid and multi-cloud environments, unlike FSx, which is limited to the AWS ecosystem.

Alluxio’s unified namespace delivers instant, global access to storage systems like AWS S3, Azure Blob, HDFS, and Google Cloud Storage, simplifying data management and enabling AI training wherever GPUs are available—even with siloed data across regions and clouds.

Learn more about hybrid and multi-cloud capabilities

Want to see how Alluxio compares to FSx in your environment?

Performance Benchmarks

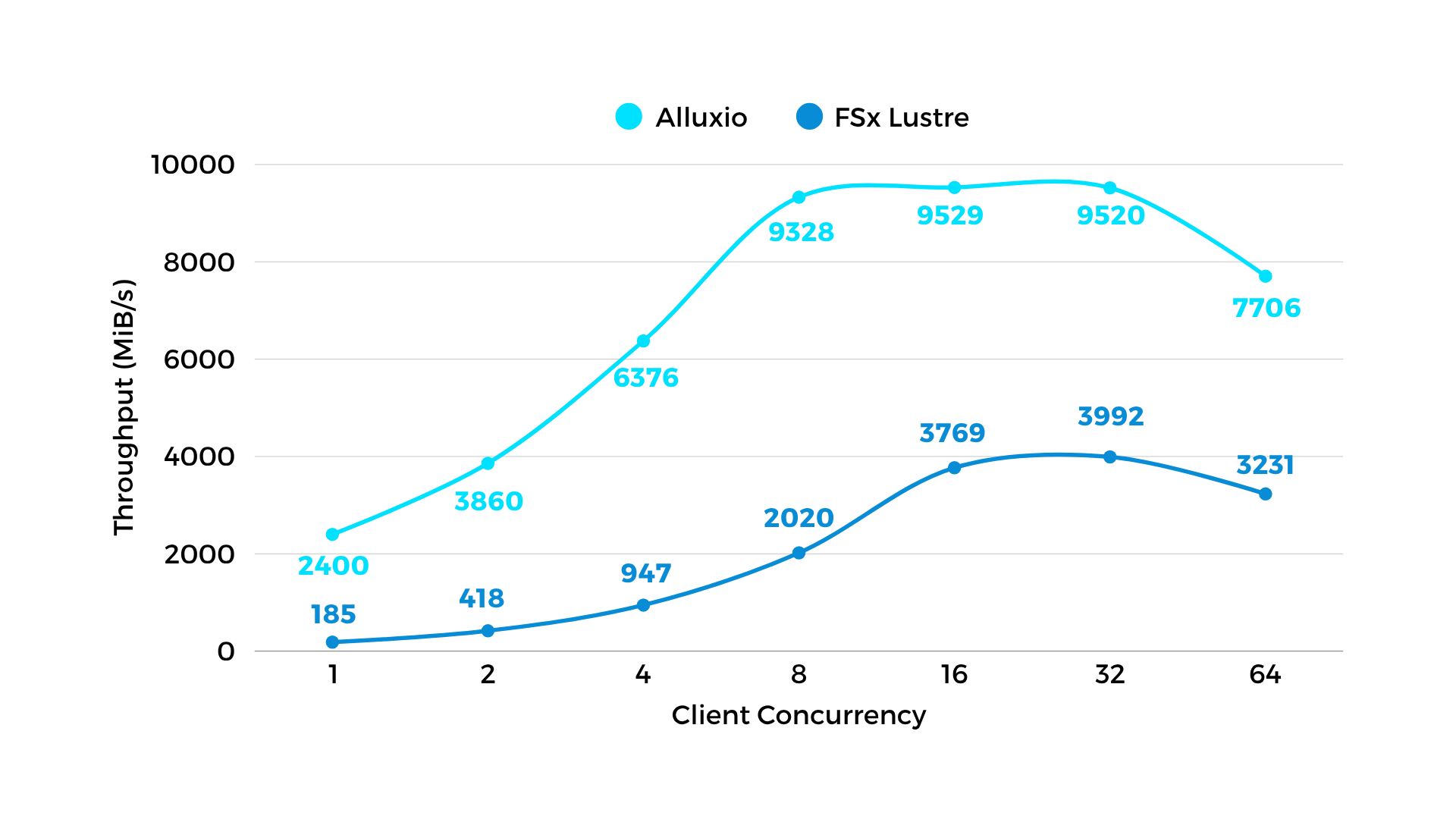

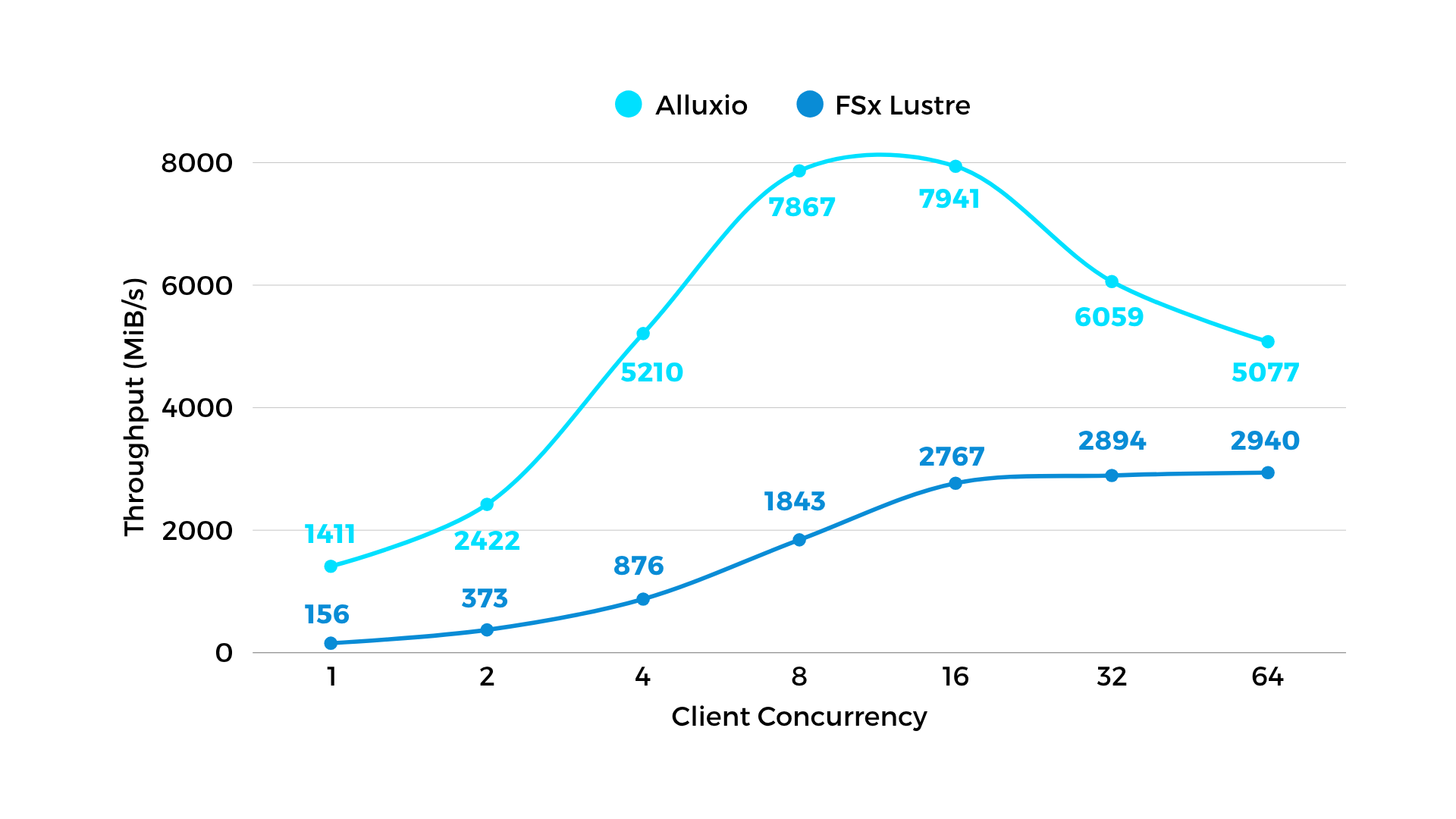

Alluxio’s DORA architecture, uniquely designed to optimize performance for AI model training and model distribution, can achieve 4-11x higher performance than parallel file systems such as FSx.

Model Training

Alluxio vs. FSx Lustre - Read Throughput

Figure 1: Read throughput - simulating training dataset reading

Alluxio vs. FSx Lustre - Write Throughput

Figure 2: Write throughput - simulating checkpoint writing

Model Distribution

Alluxio (normal) vs. FSx Lustre

Figure 3: Alluxio (light blue line) demonstrates 2x to 4x faster model distribution.

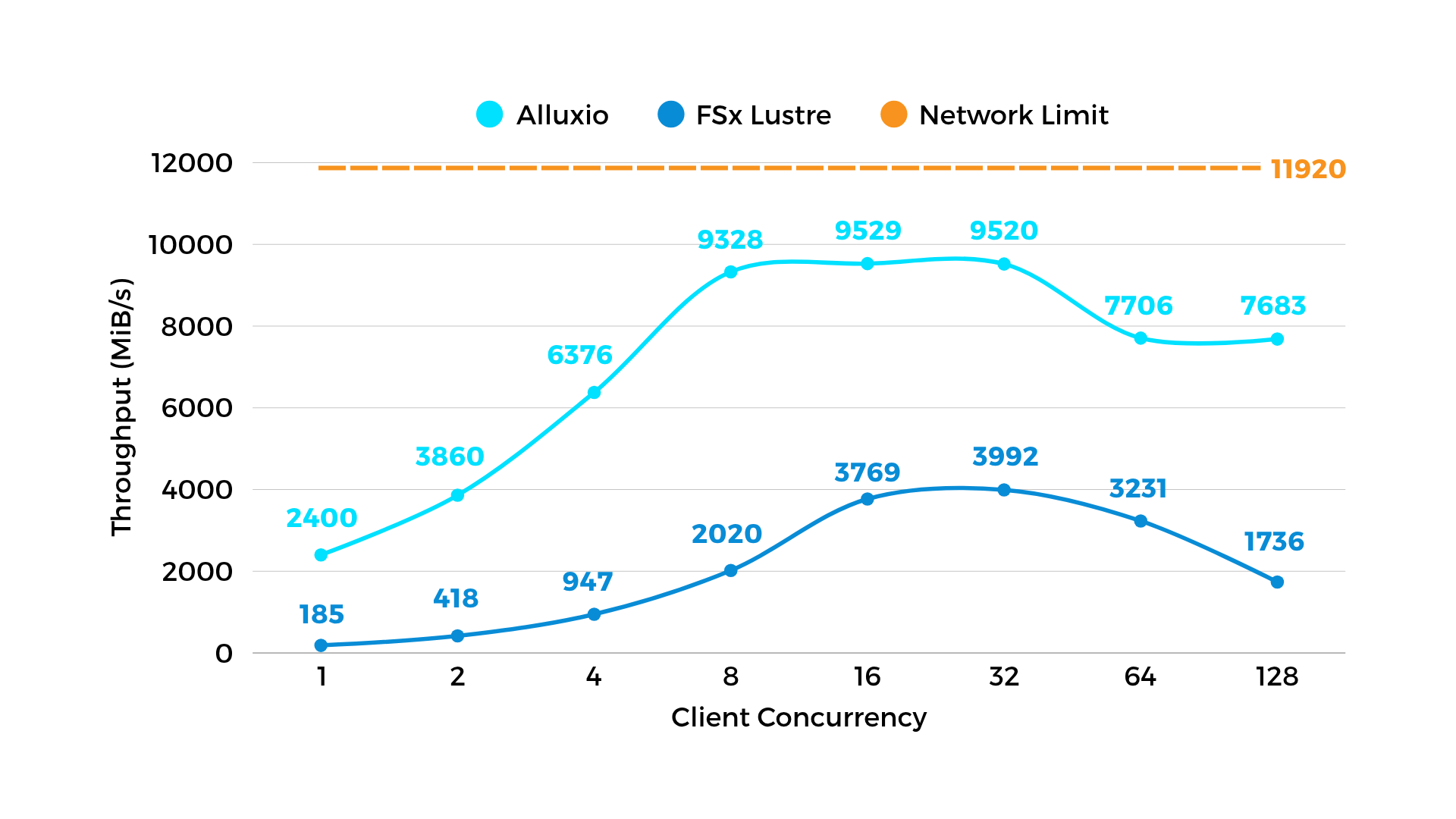

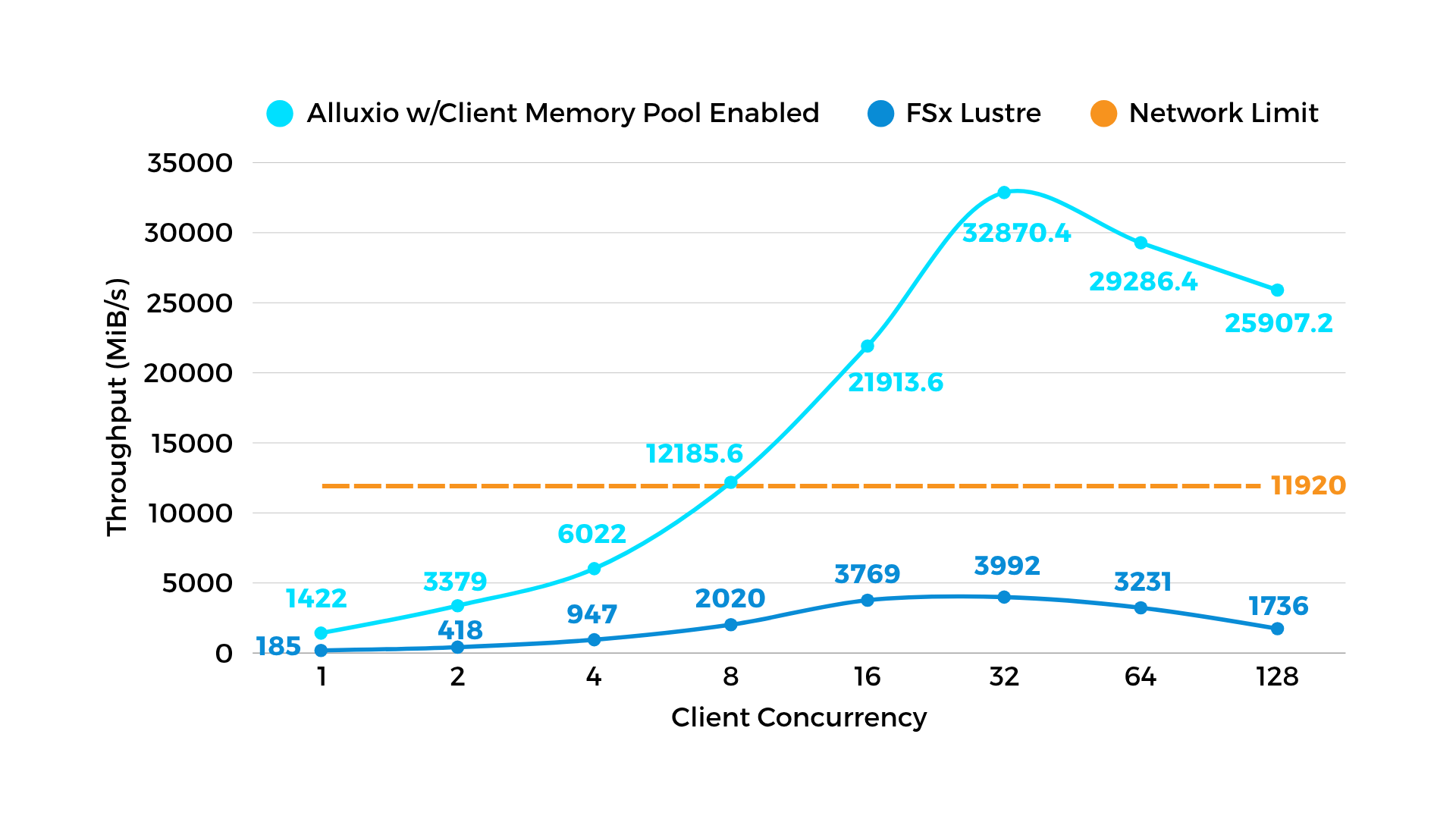

Alluxio w/Client Memory Pool Enabled vs. FSx Lustre

Figure 4: When distributing a single model across multiple GPUs on the same node, Alluxio can enable the “client memory pool” (new in AI 3.6) and deliver up to 32 GiB/s read throughput, which is 3x faster than the normal case and exceeds the network bandwidth limit.

*Test environment references:

Alluxio

- Version/Spec: Alluxio Enterprise AI 3.6 (50TB cache)

- Test env: 1 FUSE (C5n.metal, 100Gbps network) and 1 Worker (i3en.metal)

FSx Lustre

- Version/Spec: 24TB (Class Type: 1000MiB/s/TiB)

- Test env: 1 FUSE (C5n.metal, 100Gbps network)
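For context when reading the figures, the hardware ceilings implied by these specs can be worked out directly. The sketch below assumes decimal network units (1 Gbps = 10^9 bits/s), treats the FSx "24TB" capacity as 24 TiB when applying the per-TiB throughput class, and ignores protocol overhead. These are simplifying assumptions for illustration, not published figures.

```python
GIB = 2**30
MIB = 2**20

def nic_limit_gib_per_s(gbps: float) -> float:
    """Line rate of a NIC in GiB/s (decimal gigabits in, binary gibibytes out)."""
    return gbps * 1e9 / 8 / GIB

def fsx_provisioned_gib_per_s(capacity_tib: float, mib_per_s_per_tib: float) -> float:
    """Aggregate provisioned throughput for a per-TiB throughput class."""
    return capacity_tib * mib_per_s_per_tib * MIB / GIB

nic = nic_limit_gib_per_s(100)            # client with a 100 Gbps network
fsx = fsx_provisioned_gib_per_s(24, 1000)  # 24 TiB at 1000 MiB/s/TiB (assumed TiB)

print(f"client NIC ceiling: {nic:.1f} GiB/s")  # 11.6 GiB/s
print(f"FSx provisioned:    {fsx:.1f} GiB/s")  # 23.4 GiB/s
print(f"32 GiB/s vs NIC:    {32 / nic:.1f}x")  # 2.7x
```

Under these assumptions a single 100 Gbps client tops out near 11.6 GiB/s over the network, so a 32 GiB/s read rate can only be reached when the data is already held on the node, which is consistent with the client memory pool description in Figure 4.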

Customer Testimonials

“The new distributed caching architecture has improved model training speed, reduced storage costs, increased GPU utilization across clusters, lowered operational overhead, enabled training workload portability, and delivered 40% better I/O performance compared to parallel file systems.”

Alluxio vs. FSx at A Glance

| FSx Lustre | Alluxio | Alluxio’s Impact |
| --- | --- | --- |
| Network-mounted file system; data pulled over the network | Caches data on local SSDs / memory of GPU servers | Boosts GPU utilization, reduces I/O bottlenecks, no extra hardware cost |
| Centralized metadata service, a potential throughput bottleneck at large scale | Decentralized metadata management, optimized for AI data workloads | Higher throughput in end-to-end model training and deployment |
| Full model reload on cold start | Keeps model files hot on the host; optimized for cold starts across multiple GPUs per node | Shorter idle time, faster deployment |
| Charges for both capacity and throughput tiers | No IOPS charges | 50-80% lower cost |
| AWS-managed service | Deploy anywhere: AWS, GCP, Azure, on-prem, Kubernetes | Avoids vendor lock-in with the same data access layer across environments |
| Natively integrates with AWS S3 | Connects to AWS S3, GCS, Azure Blob, HDFS, NFS, Ceph, and other object & file stores | Consolidate data from many backends without rewriting workloads |
| POSIX API only | S3-compatible API and POSIX API | Broader workload compatibility |

FAQ

Is Alluxio a storage system like Amazon FSx?

No, Alluxio is not a storage system like Amazon FSx for Lustre. Alluxio is an AI-scale distributed caching platform that brings data locality and horizontal scalability to AI workloads. Alluxio does not offer persistent storage. Instead, it uses the Under File System concept to leverage your existing data lakes and commodity storage systems. In contrast, Amazon FSx for Lustre is a traditional parallel file system limited to the AWS ecosystem and typically lacks advanced caching or federated data access across storage types.

Can Alluxio read directly from AWS S3?

Yes—Alluxio can connect directly to AWS S3 as an underlying data source. It reads and caches S3 objects on demand, enabling high-throughput, low-latency access without data duplication or manual pre-staging. Unlike FSx for Lustre, which requires staging S3 data into a file system before use, Alluxio provides zero-copy access to S3—eliminating delays and operational overhead.
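The on-demand behavior described here is the classic read-through cache pattern. The sketch below is a generic, in-memory illustration of that pattern only; `ReadThroughCache` and `fetch_from_store` are hypothetical names, and nothing here reflects Alluxio's internal API.

```python
from typing import Callable, Dict

class ReadThroughCache:
    """On a miss, fetch from the backing store once and keep the bytes locally."""

    def __init__(self, fetch_from_store: Callable[[str], bytes]):
        self._fetch = fetch_from_store
        self._cache: Dict[str, bytes] = {}
        self.misses = 0

    def read(self, key: str) -> bytes:
        if key not in self._cache:
            # Cold read: go to the backing store and remember the result.
            self.misses += 1
            self._cache[key] = self._fetch(key)
        return self._cache[key]

# Stand-in for an object store; a real deployment would read from S3.
fake_store = {"s3://bucket/model.bin": b"weights"}
cache = ReadThroughCache(fake_store.__getitem__)

cache.read("s3://bucket/model.bin")  # cold read: goes to the store
cache.read("s3://bucket/model.bin")  # warm read: served locally
print(cache.misses)  # 1
```

The application never pre-stages anything: the first read pays the fetch cost, and later reads of the same object are served from the local copy.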

Why choose Alluxio instead of FSx?

Alluxio is purpose-built to accelerate AI workloads in ways FSx for Lustre cannot. Compared to FSx, Alluxio offers:

- Faster end-to-end model training and deployment by eliminating data staging delays

- High performance that scales linearly across compute clusters and storage tiers

- Improved GPU utilization by minimizing idle time during data loading

- Lower total cost of ownership: no IOPS charges and more efficient use of storage

- Seamless support for hybrid and multi-cloud environments, not just AWS

Whether you're running training pipelines, inference, or retrieval-augmented generation (RAG), Alluxio delivers intelligent caching and zero-copy access to data in AWS S3 and other data lakes—without the limitations of FSx.

Can I use Alluxio in a Kubernetes environment?

Absolutely. Alluxio offers a Kubernetes-native operator, simplifying deployment and integration in containerized AI platforms. Unlike FSx, it’s built to work smoothly in cloud-native environments.

Do I need to modify my application to use Alluxio?

No. Alluxio provides transparent data access via POSIX (FUSE), S3, HDFS, and Python APIs—so you can integrate it with existing applications without any code changes.
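As a sketch of what this means for the POSIX (FUSE) path: code that already reads local files keeps using ordinary file I/O, and only the directory it points at changes. The mount point `/mnt/alluxio` mentioned in the comment is a hypothetical example; the snippet uses a temporary directory as a stand-in so it runs anywhere.

```python
import os
import tempfile

def load_sample(data_root: str, name: str) -> bytes:
    """Plain POSIX file read; unaware of what backs data_root."""
    with open(os.path.join(data_root, name), "rb") as f:
        return f.read()

# In production, data_root might be a FUSE mount such as /mnt/alluxio
# (hypothetical path). Here a temporary directory stands in for it.
with tempfile.TemporaryDirectory() as data_root:
    with open(os.path.join(data_root, "sample.bin"), "wb") as f:
        f.write(b"\x00\x01\x02")
    payload = load_sample(data_root, "sample.bin")

print(len(payload))  # 3
```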

Do I need to have a hybrid or multi-cloud environment in order to get the benefits from Alluxio?

Not at all. You can still benefit from performance gains and cost savings compared to FSx even if you run entirely in a single cloud, such as AWS.

How does Alluxio pricing compare to Amazon FSx for Lustre pricing?

In head-to-head comparisons with FSx, Alluxio can save 50-80% on storage costs alone. Additionally, unlike FSx, Alluxio does not charge for IOPS, which AI workloads can require in high volume. Contact us for a custom quote.

What are Alluxio’s top workloads and industries?

Alluxio is designed for AI workloads, including Gen AI, LLM training and inference, multi-modal, autonomous systems and robotics, agentic systems, and more. Alluxio powers AI platforms across industries, including fintech, autonomous driving, embodied AI, robotics, inference-as-a-service, social media content platforms, and enterprise AI.