Fireworks AI, a leading inference cloud provider, successfully eliminated its LLM cold start problem by implementing Alluxio's distributed data caching solution. The implementation resulted in dramatically improved model loading speeds and reduced cloud egress costs across multiple GPU clouds. With Alluxio, Fireworks AI's high-speed, globally distributed inference solution reduced model loading times from hours to minutes, directly improving the customer experience.

About Fireworks AI

Fireworks AI is a leading inference cloud provider for generative AI. From real-time inference to model optimization, Fireworks AI provides the platform that empowers developers and enterprises to deploy, scale, and innovate with cutting-edge AI.

Infrastructure is at the core of the company, powering inference and fine-tuning services for customers' real-time applications that require minimal latency, high throughput, and high concurrency. To give customers high availability, the GPU infrastructure spans 10+ clouds and 20+ regions. At scale, Fireworks AI handles over 200,000 queries per second and serves 10+ trillion tokens per day.

The Challenge

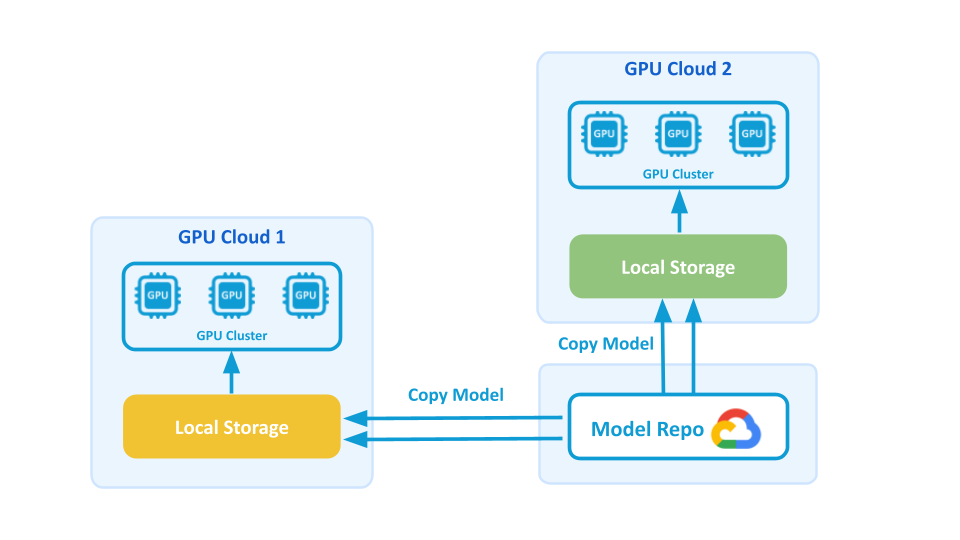

Fireworks AI’s infrastructure separates compute from storage:

- Model Storage: Model weights are stored in Google Cloud Storage (GCS) and other S3-compatible storage

- Compute Infrastructure: GPU clusters spanning multiple clouds, including Google Cloud and more

Models are regularly pulled down to the GPU nodes for inference operations. Fireworks AI's engineering team had built a custom solution that downloaded model files from their primary object storage (GCS), cached them into regional object storage closer to inference regions, and used a basic per-node cache. However, this approach proved insufficient for their scaling requirements and performance expectations.

Technical Challenges

- Highly Concurrent Model Loading: Fireworks AI faces highly spiky and concurrent downloading of the same hot large files (models) across tens of GPU clouds. A single deployment can have anywhere from 10 to 100+ replicas, with each replica requiring a full copy of the model files. Hundreds of GPU servers download the same files (70GB to 1TB each) simultaneously within a single cluster, creating massive bandwidth demands and bottlenecks.

- Cold Start Problem: Slow download speeds result in high latency during initial model loading. Replica startup time was bound by inbound network bandwidth, which varies significantly across cloud providers and is typically much slower than internal cluster bandwidth. Downloading large models to 200+ GPU nodes could take 20+ minutes, and in some cases, over an hour due to GCS throttling.

- Manual Pipeline Management: Synchronizing many replicas that started at the same time, provisioning and managing regional object-storage caches, and manually coordinating full initialization created significant operational overhead.

- Scalability Concerns: With hypergrowth, Fireworks AI realized they would need a dedicated engineer to observe the model distribution pipeline to ensure reliability.
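
To get a feel for the bandwidth demand described above, here is a back-of-the-envelope sketch. The replica counts and model sizes come from the figures in this section; the shared ingress bandwidth of 50 GB/s is purely an illustrative assumption, not a measured value from Fireworks AI.

```python
def aggregate_download_gb(replicas: int, model_size_gb: float) -> float:
    """Total data pulled from object storage when every replica
    downloads its own full copy of the model."""
    return replicas * model_size_gb


def download_minutes(total_gb: float, ingress_gb_per_s: float) -> float:
    """Time to move total_gb through a shared inbound link."""
    return total_gb / ingress_gb_per_s / 60


# Range quoted in the text: 10 to 100+ replicas, 70 GB to 1 TB per model.
low = aggregate_download_gb(replicas=10, model_size_gb=70)
high = aggregate_download_gb(replicas=100, model_size_gb=1000)

# ASSUMPTION: 50 GB/s of shared inbound bandwidth (hypothetical figure).
assumed_ingress_gb_per_s = 50
print(f"{low:.0f} GB to {high:.0f} GB per deployment")
print(f"{download_minutes(high, assumed_ingress_gb_per_s):.1f} minutes at the high end")
```

Because every replica pulls a full copy, the total volume grows linearly with replica count, which is why inbound bandwidth rather than compute becomes the limit on startup time.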

Business Challenges

- Customer Experience Impact: Slow or unstable cold starts were critical business pain points for customers. Although the impact of faster model load speeds on revenue is difficult to quantify, an improved user experience positively impacts customer retention, renewal ARR, PoC conversions, and overall brand reputation.

- Egress Cost Burden: Fireworks AI spends tens of thousands of dollars on GCS egress fees annually, with significant costs from both regional object storage and primary GCS storage.

- Engineering Resource Waste: GCS rate limits result in wasted engineering time dedicated to babysitting model loads for several hours per week.

Solution with Alluxio

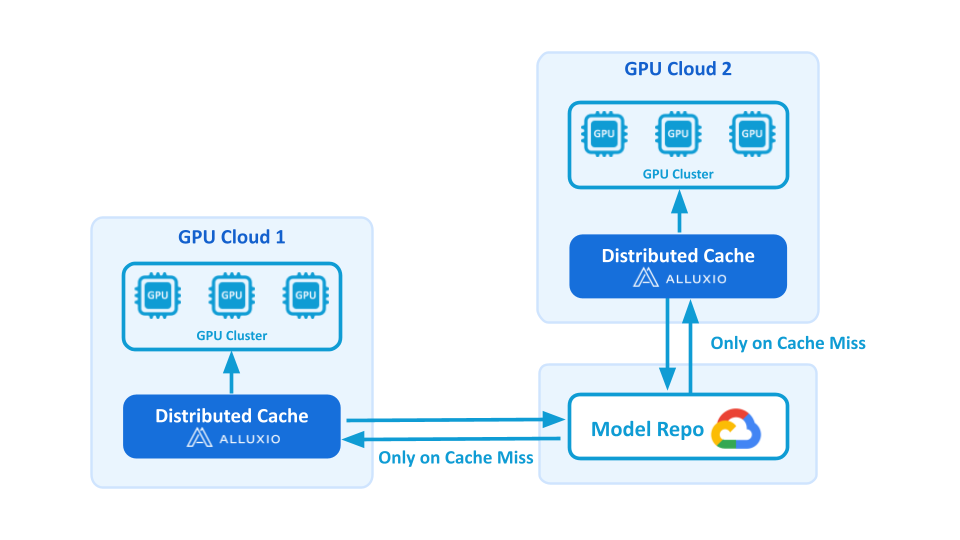

Fireworks AI implemented Alluxio's distributed data caching solution with the following architecture:

- Co-located Deployment: Alluxio cache co-located within GPU nodes. Alluxio runs on the same physical hosts as the GPUs, taking advantage of the powerful CPUs on GPU nodes, the extremely high in-cluster bandwidth, and the fast local SSDs for storage and caching.

- Efficient Model Distribution: Each model is loaded into Alluxio only once, and thousands of GPUs then read it from Alluxio simultaneously. On a cache miss, Alluxio fetches the model from the model repository; subsequent reads are served entirely from the cache.

- Seamless Integration: Alluxio fits naturally into the architecture as a caching layer between object storage and GPU workloads without requiring major application rewrites. Everything runs on Kubernetes, with easy management for configuration updates and rollouts across clusters.
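
The load-once, read-many behavior described above is a read-through cache. The following is a minimal conceptual sketch of that pattern, not Alluxio's API or implementation: the `ReadThroughCache` class, the `fake_origin` function, and the `model-a` key are all hypothetical names introduced for illustration.

```python
import threading
from typing import Callable, Dict


class ReadThroughCache:
    """Conceptual read-through cache: the first reader of a key fetches
    from the origin, and every later reader is served from the cache."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes]) -> None:
        self._fetch = fetch_from_origin
        self._data: Dict[str, bytes] = {}
        self._locks: Dict[str, threading.Lock] = {}
        self._guard = threading.Lock()
        self.origin_fetches = 0  # how many times the origin was hit

    def read(self, key: str) -> bytes:
        # Fast path: cache hit, no origin traffic.
        cached = self._data.get(key)
        if cached is not None:
            return cached
        # One lock per key so concurrent misses on the same key
        # trigger a single origin fetch instead of one per reader.
        with self._guard:
            lock = self._locks.setdefault(key, threading.Lock())
        with lock:
            if key not in self._data:
                self._data[key] = self._fetch(key)
                self.origin_fetches += 1
            return self._data[key]


def fake_origin(key: str) -> bytes:
    """Stand-in for object storage (hypothetical)."""
    return b"weights:" + key.encode()


cache = ReadThroughCache(fake_origin)
readers = [
    threading.Thread(target=cache.read, args=("model-a",)) for _ in range(100)
]
for t in readers:
    t.start()
for t in readers:
    t.join()
print(cache.origin_fetches)  # origin is hit once for 100 concurrent readers
```

The per-key lock is the design choice that matters here: without it, a burst of simultaneous cache misses would each go to the origin, recreating the very bandwidth spike the cache is meant to absorb.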

Key Metrics and Values

Performance Improvement

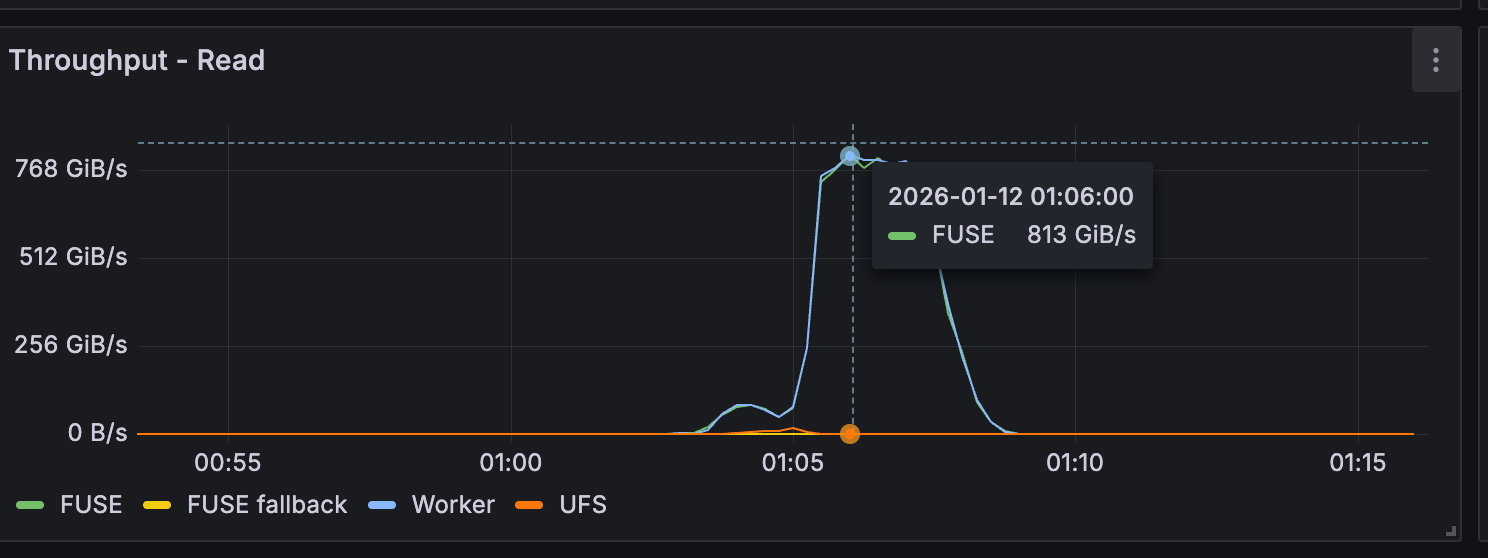

By adopting Alluxio, Fireworks AI eliminated cold start delays and reduced model loading times from hours to minutes, directly improving the customer experience through faster and more reliable model loading. With Alluxio, Fireworks AI now meets its 1 TB/s+ model deployment throughput requirements, shortening deployment to minutes across multi-cloud GPU infrastructure.

Key performance in production:

- Aggregate Throughput: 800 GB/s to 1+ TB/s across all replicas

- Single Replica Throughput: 8–10 GB/s average, with peaks up to 14 GB/s

- Cache Hit Ratio: 90–100% cache hit rates in production

- Model Load Time: Reduced from 20+ minutes (sometimes over an hour) to 2–3 minutes per replica for 800GB+ models. A 750GB model can finish loading in about 1 minute under optimal conditions.

- Daily Data Served: Some clusters serve approximately 2 petabytes of data per day
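
As a rough consistency check on the figures above, the raw transfer time for a model is its size divided by the per-replica throughput. The sketch below assumes decimal units (1 PB = 1,000,000 GB) and ignores everything except the data transfer itself, so it gives a lower bound rather than a prediction.

```python
def load_seconds(model_size_gb: float, throughput_gb_per_s: float) -> float:
    """Raw transfer time, ignoring any overhead beyond moving the bytes."""
    return model_size_gb / throughput_gb_per_s


# 800 GB model at the reported 8-10 GB/s average per replica.
slow = load_seconds(800, 8)    # 100 seconds
fast = load_seconds(800, 10)   # 80 seconds

# 750 GB model at the reported 14 GB/s peak.
best = load_seconds(750, 14)   # about 54 seconds

# 2 PB served per day expressed as an average rate (decimal units assumed).
avg_gb_per_s = 2_000_000 / 86_400

print(f"800 GB: {fast:.0f}-{slow:.0f} s; 750 GB at peak: {best:.1f} s")
print(f"2 PB/day averages {avg_gb_per_s:.1f} GB/s")
```

The 80 to 100 second transfer time sits below the reported 2–3 minute load time, which is plausible given that a full load involves more than moving bytes, and the 750 GB figure at peak throughput matches the "about 1 minute" best case. The daily average is far below the 1 TB/s peak, consistent with the spiky load pattern described earlier.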

Business Impact

- Reduced Engineering Overhead: Eliminated 4+ hours per week of manual pipeline management

- Cost Savings: Approximately 50% reduction in egress costs, translating to tens of thousands of dollars in annual savings from both GCS and eliminated regional object storage buckets

- Improved Customer Experience: Faster, more reliable model loading translates to better user experience

- Enhanced Scalability: Solution scales with Fireworks AI's hyper-growth trajectory without requiring dedicated engineering resources

Summary

By implementing Alluxio, Fireworks AI successfully transformed its model distribution architecture from a manual, error-prone process into an automated, high-performance system. Alluxio addresses Fireworks AI's technical challenges of cold start latency while delivering meaningful business value through improved customer experience, cost reduction, and engineering efficiency gains.

"Before Alluxio, we were spending hours every week manually managing model distribution pipelines, and our cold start times," said Akram Bawayah, Software Engineer at Fireworks AI. "With Alluxio's distributed caching, we've eliminated those cold start delays. What used to take hours now takes minutes. The solution scales seamlessly with our growth, and we've freed up our engineering team to focus on building features instead of overseeing infrastructure."

The implementation demonstrates how Alluxio's distributed caching technology can solve critical infrastructure challenges for AI platform providers operating at scale across multiple cloud environments. For Fireworks AI, this solution enables them to focus engineering resources on core product development rather than infrastructure maintenance, while delivering the high-performance, low-latency experience their customers demand.
