Introduction to Hadoop Distributed File System (HDFS)

What is HDFS?

The Hadoop Distributed File System (HDFS) is the primary data storage system used by Hadoop applications. It is a distributed file system that provides high-throughput access to application data. It is part of the big data landscape and provides a way to manage large amounts of structured and unstructured data. HDFS distributes the storage of large data sets across clusters of inexpensive computers, so that processing frameworks can work on the data where it is stored.

Some of the reasons why you might use HDFS:

- Fast recovery from hardware failures – in a large HDFS cluster, a server will eventually go down, but HDFS is built to detect failures and recover automatically.

- Access to streaming data – HDFS is built for high data throughput rather than low latency, which suits streaming access to data sets.

- Large data sets – For applications that have gigabytes to terabytes of data, HDFS provides high aggregate data bandwidth and scales to hundreds of nodes in a single cluster.

- Portability – HDFS is portable across hardware platforms and is compatible with many underlying operating systems.

What is HDFS in cloud computing?

HDFS is traditionally deployed on-premises. As more companies move to the cloud, they have found it challenging to make on-prem HDFS data accessible in the cloud, for a number of reasons:

- Accessing data over WAN is too slow

- Copying data via DistCp from on-prem to cloud means maintaining duplicate data

- Using other storage systems like S3 means expensive application changes

- Using S3 via the Hadoop HDFS connector can lead to poor performance

- The growing amount of data and queries makes it hard to scale on-prem HDFS clusters

Data orchestration technologies can help solve these challenges and make on-prem HDFS available in the cloud.

What are the key features of HDFS?

Key HDFS features include:

Distributed file system: HDFS is a distributed file system (or distributed storage) that handles large data sets and runs on commodity hardware. You can use HDFS to scale a Hadoop cluster to hundreds or thousands of nodes.

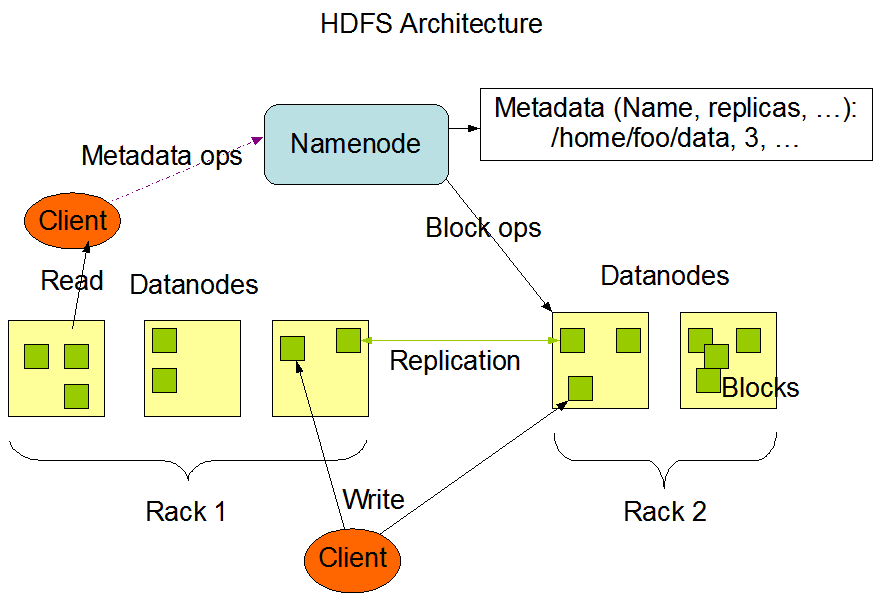

Blocks: HDFS is designed to support very large files. It splits these files into fixed-size pieces known as blocks. Each block holds up to a set amount of data that can be read or written, and HDFS stores each file as a sequence of blocks. By default, the block size is 128 MB (you can change it to suit your requirements). Hadoop distributes the blocks across multiple nodes.
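To make the block arithmetic concrete, here is a minimal Python sketch of how a file's byte range could be divided into blocks. It is an illustration only, not HDFS code: the function name `split_into_blocks` is hypothetical, and the only value taken from the text above is the 128 MB default block size.

```python
MB = 1024 * 1024
DEFAULT_BLOCK_SIZE = 128 * MB  # default from the text above; configurable in practice


def split_into_blocks(file_size, block_size=DEFAULT_BLOCK_SIZE):
    """Return (offset, length) pairs describing each block of a file.

    Hypothetical helper for illustration; it only models the arithmetic.
    """
    if file_size < 0 or block_size <= 0:
        raise ValueError("file_size must be >= 0 and block_size must be > 0")
    blocks = []
    offset = 0
    while offset < file_size:
        # Every block is full-sized except possibly the last one.
        length = min(block_size, file_size - offset)
        blocks.append((offset, length))
        offset += length
    return blocks


if __name__ == "__main__":
    for offset, length in split_into_blocks(300 * MB):
        print(f"offset={offset // MB} MB, length={length // MB} MB")
```

Under these assumptions, a 300 MB file yields three blocks: two of 128 MB and a final one of 44 MB.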

Replication: You can replicate HDFS data from one HDFS service to another. Within a cluster, data blocks are replicated to provide fault tolerance, and an application can specify the number of replicas of a file. The replication factor can be specified at file creation time and can be changed later. Files in HDFS are write-once and have strictly one writer at any time. The NameNode makes all decisions regarding replication of blocks and periodically receives a Heartbeat and a Blockreport from each of the DataNodes in the cluster. A Heartbeat indicates that the DataNode is functioning properly, and a Blockreport lists the blocks stored on that DataNode.
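The role of Heartbeats and Blockreports in replication decisions can be sketched with a small in-memory model. This is a simplified illustration, not the NameNode's actual logic: the class `HeartbeatMonitor`, its method names, and the 30-second timeout are all assumptions made for the example.

```python
from collections import defaultdict

HEARTBEAT_TIMEOUT = 30.0  # seconds; illustrative value, not an HDFS default


class HeartbeatMonitor:
    """Toy stand-in for the NameNode's view of DataNode health (hypothetical)."""

    def __init__(self, target_replicas=3):
        self.target_replicas = target_replicas
        self.last_heartbeat = {}  # DataNode id -> time of last heartbeat
        self.block_reports = {}   # DataNode id -> set of block ids it holds

    def heartbeat(self, node, now):
        self.last_heartbeat[node] = now

    def block_report(self, node, block_ids):
        self.block_reports[node] = set(block_ids)

    def live_nodes(self, now):
        # A node counts as live if it has sent a heartbeat recently enough.
        return {n for n, t in self.last_heartbeat.items()
                if now - t <= HEARTBEAT_TIMEOUT}

    def under_replicated(self, now):
        """Map block id -> live replica count, for blocks below the target."""
        live = self.live_nodes(now)
        counts = defaultdict(int)
        for node, blocks in self.block_reports.items():
            for block in blocks:
                # Adding 0 still records the block, even if no replica is live.
                counts[block] += int(node in live)
        return {b: c for b, c in counts.items() if c < self.target_replicas}


if __name__ == "__main__":
    monitor = HeartbeatMonitor(target_replicas=3)
    for node in ("dn1", "dn2", "dn3"):
        monitor.block_report(node, ["blk_1", "blk_2"])
    monitor.heartbeat("dn1", 90.0)
    monitor.heartbeat("dn2", 90.0)
    monitor.heartbeat("dn3", 10.0)  # dn3 then goes silent
    print(monitor.under_replicated(now=100.0))
```

In this sketch, `dn3` is treated as failed once its last heartbeat is older than the timeout, so both blocks drop to two live replicas and are flagged. A real NameNode would then schedule new copies on other DataNodes.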

Data reliability: HDFS stores replicas of each data block on different nodes in the cluster, providing fault tolerance. If a node fails, you can still access the data on other nodes that hold a copy of the same blocks. By default, HDFS keeps three copies of each block.
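The cost of this reliability is raw disk space. The following rough Python sketch shows the capacity arithmetic for a given replication factor. It assumes every file uses the same replication factor and that replicas sit on distinct nodes, and it ignores any other storage overhead; the function names are hypothetical.

```python
TB = 1024 ** 4


def raw_storage_needed(logical_bytes, replication=3):
    """Raw disk consumed when every block is stored `replication` times."""
    if replication < 1:
        raise ValueError("replication must be at least 1")
    return logical_bytes * replication


def usable_capacity(raw_bytes, replication=3):
    """Logical data that fits in `raw_bytes` of cluster disk."""
    if replication < 1:
        raise ValueError("replication must be at least 1")
    return raw_bytes // replication


def replica_losses_tolerated(replication=3):
    """Replicas of a block that can be lost while one copy remains readable."""
    if replication < 1:
        raise ValueError("replication must be at least 1")
    return replication - 1


if __name__ == "__main__":
    print(raw_storage_needed(10 * TB) // TB)   # raw TB needed for 10 TB of data
    print(usable_capacity(300 * TB) // TB)     # logical TB that fit in 300 TB raw
    print(replica_losses_tolerated())
```

With the default of three copies, 10 TB of data occupies 30 TB of raw disk, and each block stays readable after losing up to two of its replicas.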

What are NameNodes and DataNodes and their function?

The NameNode is the master server that manages the HDFS namespace and regulates access to files by clients. It executes namespace operations such as opening, closing, and renaming files and directories, and it maps data blocks to DataNodes.

DataNodes manage the storage attached to the nodes they run on and serve read and write requests from the file system's clients. There is usually one DataNode per node in the cluster. Files are split into data blocks and stored on sets of DataNodes.
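The division of labor between the two roles can be illustrated with a toy in-memory model: the NameNode holds only metadata (the namespace and block locations), while DataNodes hold the block contents. This is a sketch under simplifying assumptions, not the Hadoop API. The classes `SketchNameNode` and `SketchDataNode`, the `read_file` helper, and the paths and block ids are all made up for the example.

```python
class SketchDataNode:
    """Stores block contents; knows nothing about file names (hypothetical)."""

    def __init__(self, node_id):
        self.node_id = node_id
        self.blocks = {}  # block id -> bytes

    def write_block(self, block_id, data):
        self.blocks[block_id] = data

    def read_block(self, block_id):
        return self.blocks[block_id]


class SketchNameNode:
    """Holds only metadata: the namespace and block locations (hypothetical)."""

    def __init__(self):
        self.namespace = {}  # path -> ordered list of block ids
        self.locations = {}  # block id -> list of DataNode ids

    def add_file(self, path, block_ids):
        self.namespace[path] = list(block_ids)

    def add_location(self, block_id, node_id):
        self.locations.setdefault(block_id, []).append(node_id)

    def rename(self, old_path, new_path):
        # A rename touches metadata only; no block data moves.
        self.namespace[new_path] = self.namespace.pop(old_path)

    def get_block_locations(self, path):
        return [(b, self.locations[b]) for b in self.namespace[path]]


def read_file(namenode, datanodes, path):
    """Client read: ask the NameNode where blocks live, then fetch from DataNodes."""
    data = b""
    for block_id, node_ids in namenode.get_block_locations(path):
        data += datanodes[node_ids[0]].read_block(block_id)
    return data


if __name__ == "__main__":
    datanodes = {"dn1": SketchDataNode("dn1"), "dn2": SketchDataNode("dn2")}
    namenode = SketchNameNode()
    namenode.add_file("/logs/a.txt", ["blk_1", "blk_2"])
    for block_id, node_id, payload in [("blk_1", "dn1", b"hello "),
                                       ("blk_2", "dn2", b"world")]:
        datanodes[node_id].write_block(block_id, payload)
        namenode.add_location(block_id, node_id)
    namenode.rename("/logs/a.txt", "/logs/b.txt")
    print(read_file(namenode, datanodes, "/logs/b.txt"))
```

The design point the sketch tries to capture is that file data never flows through the NameNode: the client uses it only to look up block locations and then talks to the DataNodes directly.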

Here’s a sample architecture from Apache Hadoop:

What are Hadoop daemons?

Daemons are processes that run in the background. Hadoop has four primary daemons: NameNode, DataNode, ResourceManager (runs on the master node for YARN), and NodeManager (runs on each worker node for YARN).