Get Faster Machine Learning Across Data Centers and Clouds

Got data-hungry ML workloads? Great.

Now accelerate them with Alluxio, the leading open source data orchestration platform for large-scale AI/ML and analytics applications.

Alluxio improves I/O efficiency in the data loading and preprocessing stages of AI/ML training pipelines, reducing end-to-end training time and cost. With Alluxio, data loading from cloud storage, data caching, and training can run in parallel, providing up to an 8-12x performance boost. Combined with the latest-generation Intel® Xeon® Scalable Processors and Intel® Optane™ persistent memory, Alluxio helps you take ML performance to new heights.
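To illustrate why overlapping data loading with training helps, here is a minimal Python sketch of a prefetching pipeline. It is not Alluxio's implementation: the function names, the sleep-based stand-ins for I/O and compute, and the queue size are all assumptions chosen for illustration.

```python
import queue
import threading
import time


def load_batches(num_batches, out_queue, load_seconds):
    """Producer: stands in for fetching batches from remote storage."""
    for batch_id in range(num_batches):
        time.sleep(load_seconds)  # simulated I/O latency (assumption)
        out_queue.put(batch_id)
    out_queue.put(None)  # sentinel: no more data


def train(num_batches, load_seconds=0.01, step_seconds=0.01, prefetch=4):
    """Consumer: runs simulated training steps while the loader fetches ahead."""
    batches = queue.Queue(maxsize=prefetch)  # bounded buffer of ready batches
    loader = threading.Thread(
        target=load_batches,
        args=(num_batches, batches, load_seconds),
        daemon=True,
    )
    loader.start()

    processed = []
    while True:
        batch = batches.get()
        if batch is None:
            break
        time.sleep(step_seconds)  # simulated compute (assumption)
        processed.append(batch)

    loader.join()
    return processed
```

Because the loader thread keeps filling the queue while a training step runs, the consumer rarely waits on I/O once the buffer is warm. A caching layer close to compute plays a similar role at cluster scale.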

Accelerate Data Access for End-to-End Machine Learning Training Pipelines

Alluxio separates storage from compute, while advanced caching and data tiering enable faster, lower-cost data access for ML workloads across on-prem, hybrid, and multi-cloud environments.

Bring Data Closer to ML Frameworks

Data Locality

Bring your data closer to compute.

Make your data local to compute for faster TensorFlow, PyTorch, and Spark ML processing.

Data Accessibility

Make your data accessible.

Whether your data sits on-prem or in the cloud, in HDFS or S3, make your files and objects accessible in many different ways.

Data On-Demand

Make your data as elastic as compute.

Effortlessly orchestrate your data for compute in any cloud, even if data is spread across multiple clouds.

PROVEN AT SCALE

Alluxio is used by eight of the ten largest Internet companies, including Facebook, Uber, TikTok, Walmart, Tencent, and Comcast. Alluxio’s free Community Edition and Alluxio Enterprise Edition are also used in production at thousands of the most data-intensive companies in finance, retail, communications, pharmaceuticals, and other industries.

Key Technical Features

Compute-focused

Support for hyperscale workloads

Supports a billion files and thousands of workers and clients, all with high availability.

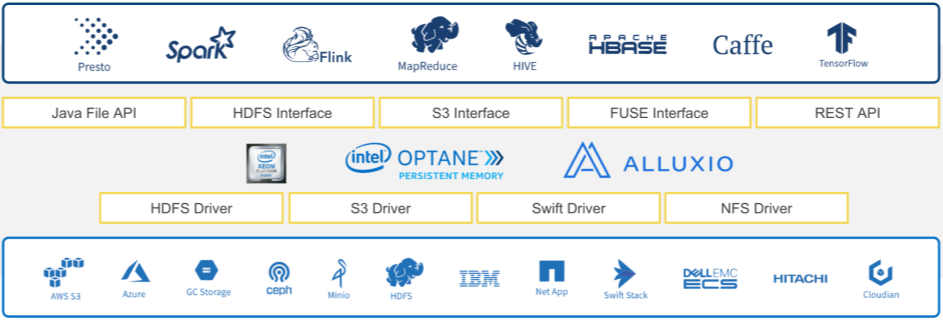

Flexible APIs

Integrates with compute frameworks such as Spark, Presto, TensorFlow, and Hive out of the box through HDFS, S3, Java, RESTful, or POSIX-based APIs.
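As a rough sketch of what POSIX-style access means for a training script, the Python below reads files from a locally mounted directory using only the standard library. The mount path `/mnt/alluxio/datasets` and the helper names are hypothetical; the point is that code written against ordinary file paths needs no storage-specific client.

```python
from pathlib import Path


def list_training_files(mount_root, suffix=".csv"):
    """Return all files under mount_root with the given suffix, sorted by path."""
    root = Path(mount_root)
    return sorted(p for p in root.rglob(f"*{suffix}") if p.is_file())


def read_first_line(path):
    """Read the first line of a file, for example a CSV header."""
    with open(path, "r", encoding="utf-8") as handle:
        return handle.readline().rstrip("\n")
```

Assuming the data were exposed at a mount point, a call such as `list_training_files("/mnt/alluxio/datasets")` would work the same way it does against a local disk.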

Intelligent data caching and tiering

Automatically utilizes near-compute storage media for optimal data placement based on data topology and workload.

Storage-focused

Built-in data policies

Provides highly customizable data policies for persistence, cross-storage data migration, and distributed load.

Plug and play under stores

Integrates with under store systems such as HDFS, S3, Azure Blob Storage, and Google Cloud Storage through a range of interfaces.

Transparent unified namespace for file system and object stores

Mounts multiple storage systems into a single consolidated namespace for both read and write workloads.
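The idea of a unified namespace can be sketched as a mount table that maps a logical path to the storage system backing it. The Python below is an illustrative model only, not Alluxio's code or API: the `MountTable` class, the longest-prefix rule, and the example URIs are all assumptions.

```python
class MountTable:
    """Toy model: maps logical namespace paths to under-store URIs."""

    def __init__(self):
        self._mounts = {}  # mount point -> under-store URI

    def mount(self, namespace_path, under_store_uri):
        """Attach an under-store location at a logical path."""
        mount_point = namespace_path.rstrip("/") or "/"
        self._mounts[mount_point] = under_store_uri.rstrip("/")

    def resolve(self, path):
        """Translate a logical path using the longest matching mount point."""
        best = None
        for mount_point in self._mounts:
            prefix = "/" if mount_point == "/" else mount_point + "/"
            if path == mount_point or path.startswith(prefix):
                if best is None or len(mount_point) > len(best):
                    best = mount_point
        if best is None:
            raise KeyError(f"no mount covers {path}")
        relative = path[len(best):].lstrip("/")
        base = self._mounts[best]
        return f"{base}/{relative}" if relative else base


# Hypothetical mounts: one HDFS root and one S3 bucket under /images.
table = MountTable()
table.mount("/", "hdfs://namenode:9000/warehouse")
table.mount("/images", "s3://example-bucket/images")
```

With these mounts, `table.resolve("/images/cat.jpg")` maps to the S3 location while `table.resolve("/logs/app.log")` falls through to HDFS, so clients see a single tree regardless of where each file lives.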

Enterprise-ready

Security

Provides data protection on the wire and in the cloud with built-in auditing, role-based access control, LDAP, Active Directory, and encrypted communications.

Monitoring and management

Provides a user-friendly web interface and command line tools, allowing users to monitor and manage their cluster.

Enterprise high availability with tiered locality

Includes adaptive replication across regions and zones to maximize performance and availability.

Alluxio + Intel: Better Together

Accelerate Machine Learning with Alluxio and Latest-Gen Intel® Xeon® Scalable Processors

1.2-1.25x better price/performance

3rd Gen Intel® Xeon® Scalable Processors compared to the prior generation available on AWS

GET MORE FACTS

Accelerating analytics workloads with Alluxio data orchestration and Intel® Optane™ persistent memory

Ultra Fast Deep Learning in Hybrid Cloud using Intel Analytics Zoo & Alluxio

Accelerating Queries on Cloud Data Lakes

Enabling Hybrid Cloud Analytics and AI with Data Orchestration

Intel: How to Use Alluxio to Accelerate Big Data Analytics on the Cloud and New Opportunities with Persistent Memory
