Accelerate Distributed PyTorch/Ray Workloads in the Cloud

Chunxu Tang, Alluxio & Siyuan Sheng, Alluxio

SPEAKERS

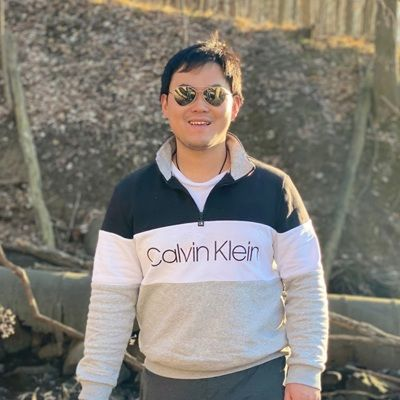

Jing Zhao

@Uber

Principal Engineer

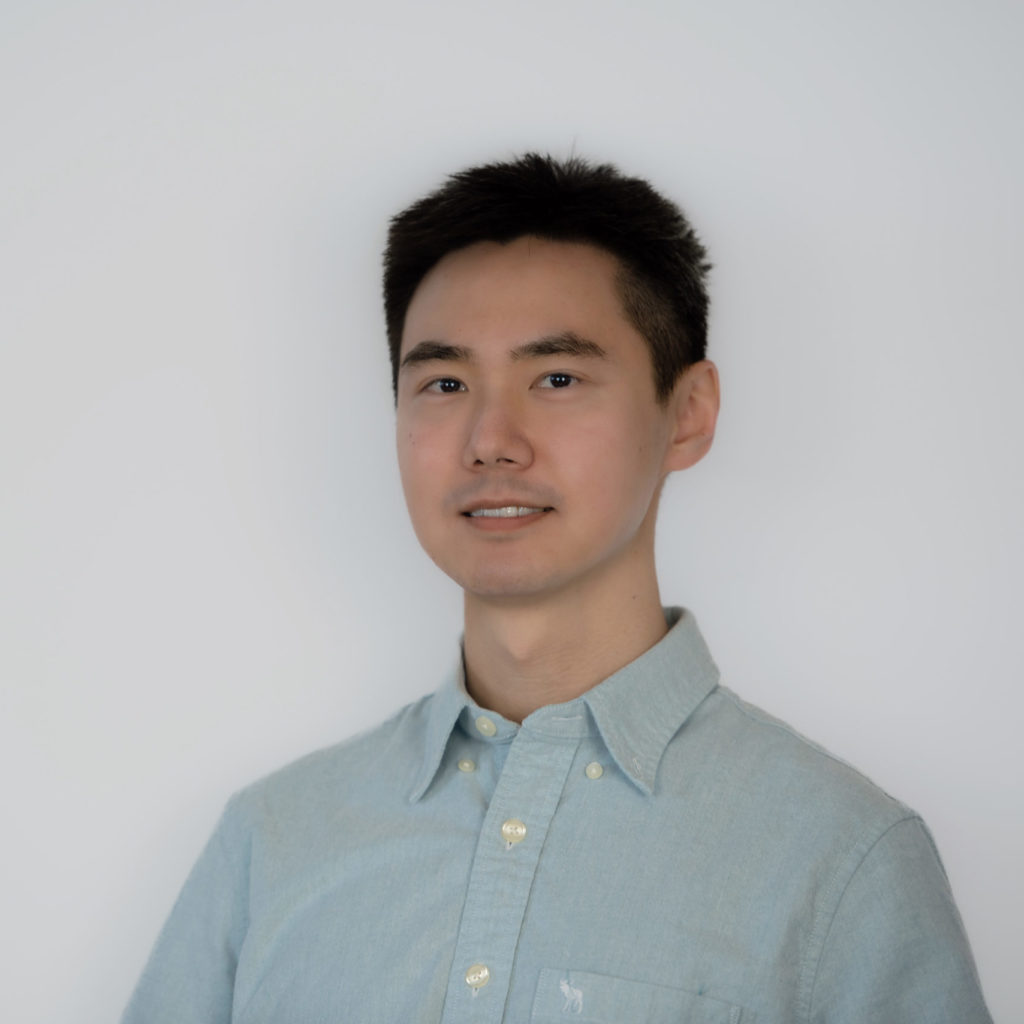

Juncheng Yang

@Carnegie Mellon University

5th-year CS Ph.D.

Shengxuan Lin

@ByteDance

Software Engineer

Hojin Park

@Carnegie Mellon University

5th-year CS Ph.D.

Bin Fan

@Alluxio

Chief Architect & VP of Open Source

Jingwen Ouyang

@Alluxio

Product Manager

Chunxu Tang

@Alluxio

Research Scientist

Siyuan Sheng

@Alluxio

Sr Software Engineer

Hope Wang

@Alluxio

Developer Advocate

Tarik Bennett

@Alluxio

Sr Solutions Engineer

SCHEDULE-AT-A-GLANCE

See You Soon!

Times are listed in Pacific Daylight Time (PDT). The agenda is subject to change.